Introduction

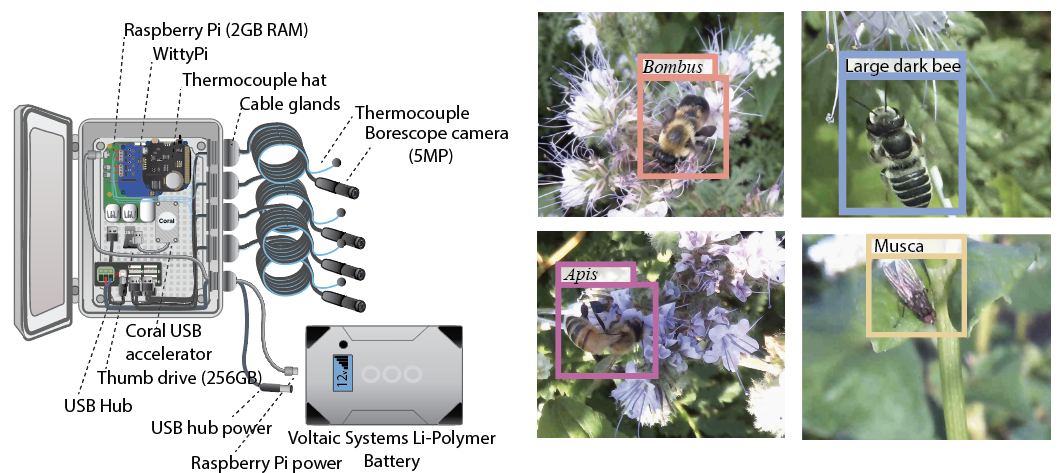

Welcome to the AutoPollS documentation. AutoPollS is an adaptable, open-source camera trap designed to automatically detect insects in natural settings. The system is built from commercially available parts to emphasize accessibility and modularity. Its core components are a Raspberry Pi connected to four USB borescope cameras, which allow continuous monitoring of an environment. The Raspberry Pi runs inference on the streamed images using an on-device object detection model that can be tuned to an insect (or other animal) of interest.

When the object detection model is running, the user can set a confidence threshold that determines which images and detections are saved. Alternatively, the user can disable on-device inference and run the system as a timelapse camera, saving images at a user-defined interval.
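The two operating modes come down to a single decision: whether the current frame should be saved. The following is a minimal sketch. It assumes a hypothetical `CaptureConfig` with `inference_enabled`, `confidence_threshold`, and `interval_s` fields. These names and their default values are illustrative and are not part of the AutoPollS code.

```python
from dataclasses import dataclass


@dataclass
class CaptureConfig:
    """Hypothetical settings object; field names and defaults are illustrative."""
    inference_enabled: bool = True
    confidence_threshold: float = 0.5  # used only when inference is enabled
    interval_s: float = 60.0           # used only in timelapse mode


def should_save(config: CaptureConfig,
                max_confidence: float,
                seconds_since_last_save: float) -> bool:
    """Decide whether the current frame should be written to disk."""
    if config.inference_enabled:
        # Detection mode: save only when the model is confident enough.
        return max_confidence >= config.confidence_threshold
    # Timelapse mode: ignore the model and save on a fixed interval.
    return seconds_since_last_save >= config.interval_s


detect = CaptureConfig(inference_enabled=True, confidence_threshold=0.6)
timelapse = CaptureConfig(inference_enabled=False, interval_s=300)

print(should_save(detect, max_confidence=0.72, seconds_since_last_save=5))
print(should_save(timelapse, max_confidence=0.0, seconds_since_last_save=120))
```

In timelapse mode the model's confidence is ignored entirely. This matches turning off on-device inference.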

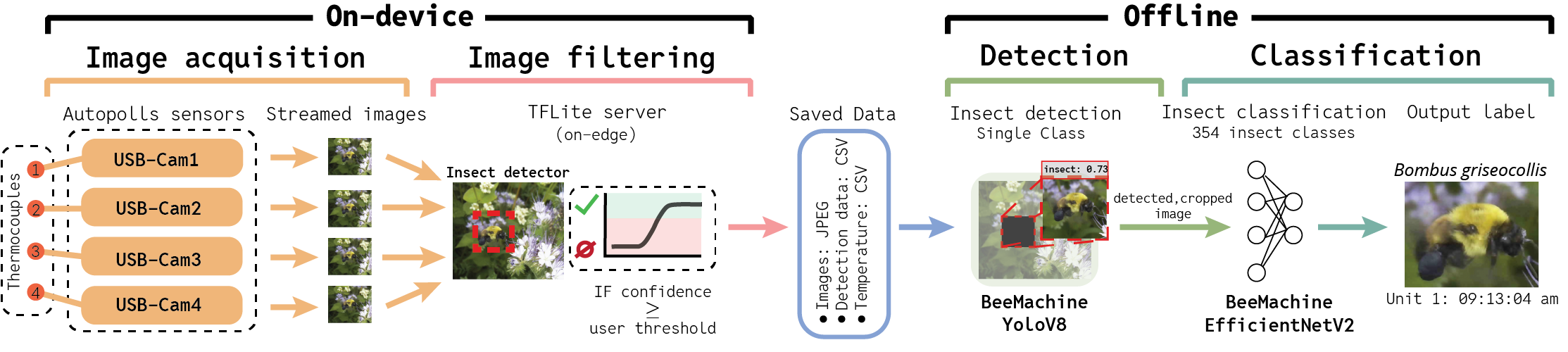

The AutoPollS workflow consists of four core components:

Image Acquisition

The AutoPollS unit can be deployed in the field to capture images automatically at a set frequency. The default configuration uses four USB borescope cameras. Each camera can be paired with a thermocouple probe if the thermocouple HAT is attached and the corresponding probes are installed.
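The bookkeeping for one capture round can be sketched as follows. The sketch assumes that each camera index is paired with the thermocouple probe of the same index. The `capture_round` and `schedule` helpers, the `read_probe` callback, and the file-naming scheme are all hypothetical. Actual frame grabbing from the USB cameras (for example with OpenCV's `cv2.VideoCapture`) is omitted so the example runs without hardware.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List, Optional

NUM_CAMERAS = 4  # default configuration: four borescope cameras


@dataclass
class CaptureRecord:
    camera_id: int
    timestamp: datetime
    filename: str
    temperature_c: Optional[float]  # None when no thermocouple HAT is attached


def capture_round(timestamp: datetime,
                  read_probe: Optional[Callable[[int], float]] = None
                  ) -> List[CaptureRecord]:
    """Build one record per camera, pairing camera i with probe i if present."""
    records = []
    for cam in range(NUM_CAMERAS):
        temp = read_probe(cam) if read_probe is not None else None
        # Hypothetical naming scheme: camera index plus capture time.
        name = f"cam{cam}_{timestamp:%Y%m%d_%H%M%S}.jpg"
        records.append(CaptureRecord(cam, timestamp, name, temp))
    return records


def schedule(start: datetime, interval_s: float, rounds: int) -> List[datetime]:
    """Capture times for a fixed acquisition frequency."""
    return [start + timedelta(seconds=interval_s * i) for i in range(rounds)]


def fake_probe(cam: int) -> float:
    """Stand-in for a real thermocouple reading (hypothetical values)."""
    return 20.0 + cam


times = schedule(datetime(2024, 6, 1, 9, 0, 0), interval_s=30, rounds=3)
for record in capture_round(times[1], fake_probe):
    print(record.filename, record.temperature_c)
```

When no probe reader is supplied, for example because the thermocouple HAT is absent, every record's `temperature_c` is `None` and the images are still captured.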

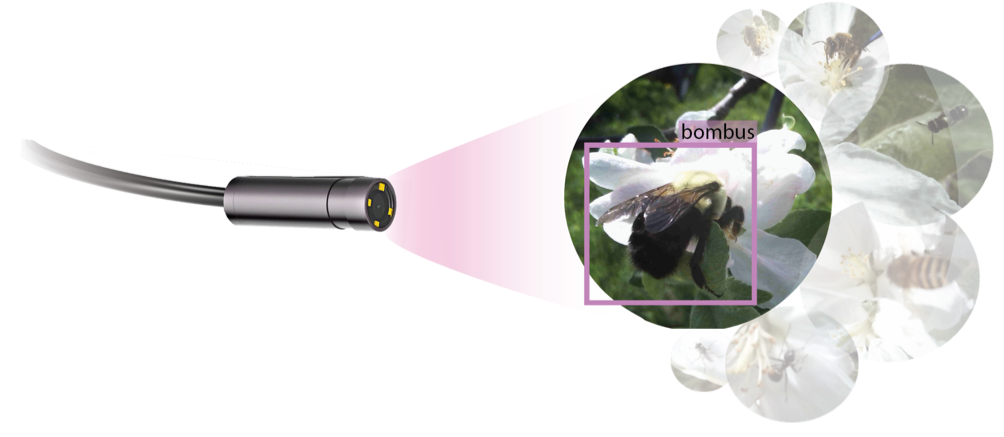

Image Filtering

On the device, a deep-learning-based object detection model filters images for the object (insect) of interest. The AutoPollS unit uses the BeeMachine model developed in the Spiesman lab, converted to an edge-optimized architecture (YOLOv8n) in TensorFlow Lite. For each image passed to the on-device model, the model outputs a confidence score estimating how likely it is that the image contains the object of interest. This score is compared against the user-set threshold to decide whether the image is saved or discarded.

Lowering this threshold makes the AutoPollS device also save images that are less likely to contain the object of interest, so more images are saved. Raising it restricts saving to images with a high likelihood of containing the object of interest, so fewer images are saved.
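The effect of the threshold can be illustrated with a few confidence scores. This minimal sketch assumes each image has already been reduced to the highest detection confidence reported by the on-device model. The scores and file names are made up for illustration.

```python
from typing import Dict, List


def images_to_save(scores: Dict[str, float], threshold: float) -> List[str]:
    """Keep images whose top detection confidence meets the threshold."""
    return sorted(name for name, conf in scores.items() if conf >= threshold)


# Illustrative per-image top confidences (not real AutoPollS output).
scores = {"img_001.jpg": 0.91, "img_002.jpg": 0.42,
          "img_003.jpg": 0.18, "img_004.jpg": 0.67}

for threshold in (0.25, 0.5, 0.75):
    kept = images_to_save(scores, threshold)
    print(f"threshold={threshold}: save {len(kept)} of {len(scores)}")
```

In this example, lowering the threshold from 0.75 to 0.25 increases the number of saved images from 1 to 3.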

Offline Detection

After the images are collected and saved, they are passed to an offline, high-accuracy, GPU-assisted object detection model. This additional processing step increases the signal-to-noise ratio of the dataset by removing a significant number of images that contain no insects.

This step can be run in Google Colab or on a local machine with GPU access.
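The way the offline pass removes empty images can be sketched as a post-processing step over its detections. The sketch assumes a hypothetical result format in which each image path maps to a list of `(box, confidence)` pairs. The offline model and its real output format are not specified here, and the 0.5 cutoff is only an illustrative value.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detections = Dict[str, List[Tuple[Box, float]]]


def drop_empty_images(dets: Detections, min_conf: float = 0.5) -> Detections:
    """Keep confident boxes and drop images left with no boxes at all."""
    kept = {}
    for path, boxes in dets.items():
        confident = [(box, conf) for box, conf in boxes if conf >= min_conf]
        if confident:
            kept[path] = confident
    return kept


raw = {
    "cam0/a.jpg": [((10, 20, 110, 140), 0.88)],
    "cam1/b.jpg": [],                        # nothing detected
    "cam2/c.jpg": [((5, 5, 40, 40), 0.21)],  # only a weak detection
    "cam3/d.jpg": [((50, 60, 90, 120), 0.73), ((0, 0, 15, 15), 0.12)],
}
filtered = drop_empty_images(raw)
removed = len(raw) - len(filtered)
print(f"kept {len(filtered)} images, removed {removed}")
```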

Offline Classification

The final step of the AutoPollS pipeline adds a classification label to the remaining images. The Offline Detection step produces bounding boxes that localize the object within each image. These boxes are used to crop the images, and the crops are passed to the classification model for analysis. The classification model is taken from BeeMachine.
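Converting a detection box into a crop region can be sketched as below. The sketch assumes boxes in `(x1, y1, x2, y2)` pixel coordinates and adds an optional padding margin, which is a hypothetical choice. The returned `(left, upper, right, lower)` tuple is the format that Pillow's `Image.crop` accepts. The actual AutoPollS cropping code may differ.

```python
import math
from typing import Tuple


def crop_region(box: Tuple[float, float, float, float],
                image_size: Tuple[int, int],
                pad: float = 0.1) -> Tuple[int, int, int, int]:
    """Expand a box by `pad` (fraction of its size) and clamp it to the image."""
    x1, y1, x2, y2 = box
    width, height = image_size
    dx = (x2 - x1) * pad
    dy = (y2 - y1) * pad
    # Round outward so the crop fully contains the padded box.
    left = max(0, math.floor(x1 - dx))
    upper = max(0, math.floor(y1 - dy))
    right = min(width, math.ceil(x2 + dx))
    lower = min(height, math.ceil(y2 + dy))
    return left, upper, right, lower


print(crop_region((100, 50, 300, 250), image_size=(640, 480)))
print(crop_region((600, 400, 640, 480), image_size=(640, 480)))
```

With Pillow installed, the region can be used as `Image.open(path).crop(region)` to produce the crop that is passed to the classifier. Clamping prevents boxes near the image edge from extending past the frame.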

The pipeline outputs a CSV file with columns for file path, bounding box region, bounding box confidence, classification label, and classification confidence. Temperature recordings can optionally be merged into this table so that each observation is paired with its local temperature reading.
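Writing the final table can be sketched with Python's standard `csv` module. The column names, the row layout, and the optional `temperature_c` column are assumptions based on the fields listed above. They are not the pipeline's actual schema.

```python
import csv
import io
from typing import Dict, List, Optional, TextIO

BASE_FIELDS = ["filepath", "bbox", "bbox_confidence",
               "class_label", "class_confidence"]


def write_results(rows: List[Dict[str, object]],
                  out: TextIO,
                  temperatures: Optional[Dict[str, float]] = None) -> None:
    """Write one row per detection; optionally join a temperature per file."""
    fields = BASE_FIELDS + (["temperature_c"] if temperatures is not None else [])
    writer = csv.DictWriter(out, fieldnames=fields)
    writer.writeheader()
    for row in rows:
        record = dict(row)
        if temperatures is not None:
            # Pair the observation with the reading for the same image, if any.
            record["temperature_c"] = temperatures.get(str(row["filepath"]), "")
        writer.writerow(record)


# Illustrative row; the label is a placeholder, not real BeeMachine output.
rows = [{"filepath": "cam0/a.jpg", "bbox": "10 20 110 140",
         "bbox_confidence": 0.88, "class_label": "example_label",
         "class_confidence": 0.93}]
buffer = io.StringIO()
write_results(rows, buffer, temperatures={"cam0/a.jpg": 24.5})
print(buffer.getvalue())
```

When writing to a file instead of a `StringIO`, open the file with `newline=""`, as the `csv` module documentation recommends.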